I’ve been reading about the Nano Banana Model and I’m struggling to understand how it actually works in real-world applications. Most explanations I find are either too basic or overly technical, and I can’t connect the theory to practical use cases. Can someone break it down in simple terms, explain its main components, and share examples of where it’s used and why it matters?

Nano Banana Model is one of those names that sounds like a meme, but it describes a pretty specific pattern people use in practice. Think of it less as some magical algorithm and more as a workflow for turning messy inputs into something a system can actually use.

The usual breakdown people talk about goes like this:

Nano level

That’s the tiny, atomic stuff. In real-world use, this is where you deal with small, well-defined units of data or behavior.

Examples:

- Single user actions in an app

- One purchase event

- One sentence or short text chunk in NLP

- One sensor reading in IoT

At this level you:

- Clean the data

- Normalize it

- Add metadata or context

- Maybe run a small model on it to classify or tag it
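To make the nano steps above concrete, here is a minimal sketch, assuming a raw event arrives as a dict. The `NanoEvent` class, the field names, and the tiny intent rule are all made up for illustration; the rule just stands in for whatever small model you would actually run.

```python
from dataclasses import dataclass, field


@dataclass
class NanoEvent:
    user_id: str
    action: str
    timestamp: float
    tags: dict = field(default_factory=dict)


def process_nano(raw: dict) -> NanoEvent:
    # Clean: strip stray whitespace from the raw action string.
    action = raw["action"].strip()
    # Normalize: lowercase so "Add_To_Cart" and "add_to_cart" match.
    action = action.lower()
    event = NanoEvent(raw["user_id"], action, float(raw["ts"]))
    # Add metadata: the device defaults to "unknown" when it is missing.
    event.tags["device"] = raw.get("device", "unknown")
    # Tag: a tiny hypothetical rule standing in for a small classifier.
    serious = action in {"add_to_cart", "checkout"}
    event.tags["intent"] = "serious" if serious else "browsing"
    return event


event = process_nano({"user_id": "u1", "action": "  Add_To_Cart ", "ts": "1700000000"})
print(event.action, event.tags)
```

Nothing here looks at any other event, which is what makes it a nano step.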

Banana level

This is where you group a bunch of those nano pieces into something useful and human-sized.

Examples:

- A user session made of multiple clicks

- A full customer profile made of purchases, visits, support tickets

- A full document made from many sentences

Here you:

- Aggregate nano events into meaningful chunks

- Extract features like “conversion likelihood”, “churn risk”, “topic of this document”

- Run higher level models that need context, not just one tiny datapoint
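Aggregation at the banana level could look roughly like this. It is only a sketch: the 30-minute inactivity cutoff, the tuple layout, and the feature names are assumptions, not part of any standard definition.

```python
from collections import defaultdict

SESSION_GAP_SECONDS = 1800  # assumed 30-minute inactivity cutoff


def build_sessions(events):
    """Group (user_id, timestamp, action) tuples into per-user sessions."""
    by_user = defaultdict(list)
    for user_id, ts, action in sorted(events, key=lambda e: (e[0], e[1])):
        sessions = by_user[user_id]
        # Start a new session on the first event or after a long gap.
        if not sessions or ts - sessions[-1][-1][0] > SESSION_GAP_SECONDS:
            sessions.append([])
        sessions[-1].append((ts, action))
    return dict(by_user)


def session_features(session):
    """Extract a few features that only make sense over a whole session."""
    actions = [action for _, action in session]
    return {
        "n_events": len(actions),
        "duration_s": session[-1][0] - session[0][0],
        "added_to_cart": "add_to_cart" in actions,
    }


events = [
    ("u1", 0, "view"),
    ("u1", 60, "add_to_cart"),
    ("u1", 5000, "view"),
    ("u2", 10, "view"),
]
sessions = build_sessions(events)
features = [session_features(s) for s in sessions["u1"]]
print(features)
```

A higher level model would then take these per-session features as input instead of the individual clicks.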

Model part

The point is not “new math” but structuring your system around these two scales:

- Low level = very accurate, very fast, very specific

- Higher level = slower, more contextual, more strategic

So the “model” is often:

- A pipeline of micro models at nano level

- One or more models at banana level that use the nano outputs

How it looks in real products

Example 1: E-commerce recommendations

- Nano:

- Every page view, click, add to cart is a nano event

- You tag each with product category, time, device, campaign, etc.

- Banana:

- Group those events into a “session” or into “user history”

- Compute things like: favorite categories, price sensitivity, usual time to buy

- Model:

- Quick nano model says “this click looks like window shopping vs serious intent”

- Slower banana model says “this user is 80 percent likely to buy electronics this week” and triggers recommendations or discounts

Example 2: Customer support triage

- Nano: individual messages or tickets

- Banana: whole conversation threads and user history

- Nano models: classify sentiment, language, urgency on each message

- Banana model: decide escalation, assign priority, predict churn or upsell potential based on the full convo + history

Example 3: AI / LLM workflows

This is where people quietly use a “Nano Banana” structure without naming it.

- Nano:

- Chunk a long document into small passages

- Embed each chunk, detect entities, label them

- Banana:

- Use those embeddings and labels to answer long questions, generate summaries, or search across the whole document set

Nano = “treat each chunk on its own”

Banana = “reason over all of them together with more context”
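Here is a toy version of that chunk-then-reason split. The bag-of-words `embed` function is a deliberately crude stand-in for a real embedding model, and `chunk`, `cosine`, and `search` are hypothetical helper names, so treat it as a sketch of the shape, not of any particular library.

```python
import math
from collections import Counter


def chunk(text: str, size: int = 8) -> list[str]:
    """Nano step: split text into passages of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(passage: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(w.strip(".,?!").lower() for w in passage.split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(question: str, chunks: list[str]) -> str:
    """Banana step: compare the question against every chunk, keep the best."""
    q = embed(question)
    return max(chunks, key=lambda c: cosine(q, embed(c)))


doc = (
    "Refunds are processed within five business days. "
    "Shipping is free for orders above fifty dollars. "
    "Support is available by email around the clock."
)
chunks = chunk(doc)
best = search("How long do refunds take?", chunks)
print(best)
```

Each chunk is embedded on its own, and only `search` looks across all of them.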

Why people like it

- You get scalability because nano processing can be parallelized like crazy.

- You get interpretability because you can trace “this final decision came from these nano events aggregated this way”.

- You get flexibility because changing the banana logic does not require reinventing all the nano parts.
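A small sketch of those three properties together, with made-up event weights and a made-up threshold: the nano step runs in a thread pool, the banana step keeps a trace of which events contributed, and swapping the banana logic means touching only `decide`.

```python
from concurrent.futures import ThreadPoolExecutor


def score_event(event: dict) -> dict:
    """Nano step: score one event independently of all others."""
    weights = {"view": 1, "add_to_cart": 5, "checkout": 10}  # assumed weights
    return {"id": event["id"], "score": weights.get(event["action"], 0)}


def decide(scored: list[dict], threshold: int = 10) -> dict:
    """Banana step: aggregate scores and record which events contributed."""
    total = sum(s["score"] for s in scored)
    return {
        "total": total,
        "recommend": total >= threshold,
        "trace": [s["id"] for s in scored if s["score"] > 0],
    }


events = [
    {"id": "e1", "action": "view"},
    {"id": "e2", "action": "scroll"},
    {"id": "e3", "action": "checkout"},
]

# Each event is independent, so the nano step can run in parallel.
with ThreadPoolExecutor(max_workers=4) as pool:
    scored = list(pool.map(score_event, events))

decision = decide(scored)
print(decision)
```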

Concrete mental model

Think of building with Lego:

- Each brick = nano

- The house or car = banana

- The building instructions = the model that defines how nanos combine to form bananas, and which bananas trigger which actions.

When someone throws formulas at you, mentally map them to:

- `f_nano(event)` for each little thing

- `g_banana(aggregate_of_events)` for the grouped stuff

- Then some decision or prediction comes from `g_banana`.

Actual “real world” example you might feel

If you’re doing user-facing tools or content stuff:

- Nano:

- Every photo, message, or text block a user uploads

- Tag with quality, style, content, safety

- Banana:

- Whole profile or project for that user

- Then decide which template, which suggestions, or which automation to run

In that context, apps that turn lots of raw assets into an output use this same pattern. For instance, iOS tools that generate portraits or avatars usually:

- Analyze each individual photo first (nano)

- Then combine multiple photos plus user preferences to build a consistent profile and final output (banana)

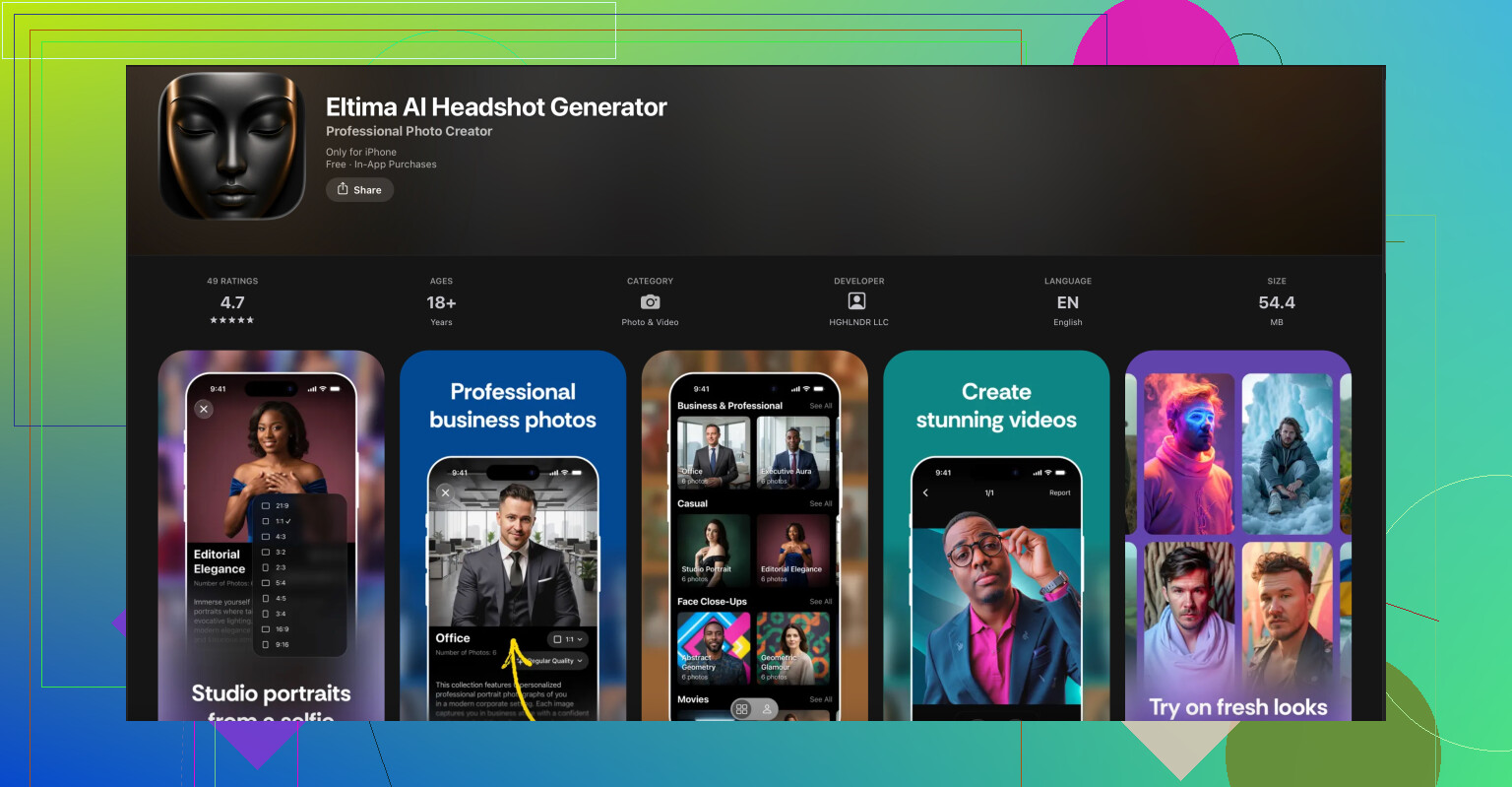

If you mess with visuals at all, a simple example is something like the Eltima AI Headshot Generator app for iPhone. Internally it follows a similar layered idea: it uses small bits of face data and style info, then aggregates them to produce coherent, studio style headshots. If you want to see this kind of pipeline “in the wild” for images, check this out:

create professional AI headshots on your iPhone

You are not going to see “Nano Banana Model” in its UI, but the conceptual structure is very similar.

If you drop what specific domain you care about (ML, UX analytics, marketing, etc), people can throw more concrete Nano vs Banana examples that match what you’re trying to build.

You’re getting tripped up because “Nano Banana Model” sounds like a research paper, but in practice it’s mostly an architecture pattern for systems, not a single algorithm you “run.”

@caminantenocturno covered the nano vs banana breakdown pretty well, so I’ll try to fill the gaps they didn’t hit and also push back on one point.

They framed it as:

- Nano = tiny units

- Banana = grouped units

- Model = how you connect them

That’s useful, but the part that usually makes it click in real life is: where do decisions happen, and who owns what?

1. The missing piece: decision boundaries

In actual products, “Nano Banana” is mostly about deciding:

- What do I decide immediately, locally, per nano thing?

- What do I postpone until I see the bigger banana?

Concrete:

- Fraud detection

- Nano: for each transaction, you do a quick risk score.

- Banana: across a week of transactions, you decide “freeze the card / call customer / allow but watch.”

If you try to push everything into the banana level, your system feels slow and overcomplicated.

If you try to keep everything nano-only, you miss patterns that only show up over time.

So the real “model” is: what must be decided now, and what can wait until we have more context?
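As a rough illustration of that split for the fraud case: every rule, weight, and cutoff below is invented for the example, and a real system would learn or tune them.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    amount: float
    country: str
    home_country: str


def nano_risk(tx: Transaction) -> float:
    """Decided immediately, per transaction (assumed rules and weights)."""
    risk = 0.0
    if tx.amount > 1000:
        risk += 0.5
    if tx.country != tx.home_country:
        risk += 0.3
    return risk


def banana_decision(week_risks: list[float]) -> str:
    """Decided later, over a week of nano scores (assumed cutoffs)."""
    risky = sum(1 for r in week_risks if r >= 0.5)
    if risky >= 3:
        return "freeze the card"
    if risky == 2:
        return "call customer"
    if risky == 1:
        return "allow but watch"
    return "allow"


week = [
    Transaction(50, "US", "US"),
    Transaction(1500, "US", "US"),
    Transaction(1200, "FR", "US"),
    Transaction(20, "FR", "US"),
]
risks = [nano_risk(tx) for tx in week]
action = banana_decision(risks)
print(risks, action)
```

The nano score is available the moment a transaction arrives, while the heavier action waits until the week's pattern is visible.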

2. Where I slightly disagree with @caminantenocturno

They said it’s not “new math,” which is fair, but in practice the math you pick often changes between levels:

Nano level tends to use:

- Simple classifiers

- Rules, thresholds, finite state machines

- Tiny models that are cheap and highly reliable

Banana level:

- Sequence models

- Graphs, Markov-style transitions

- LLMs or heavy models that reason over a whole sequence/history

So it’s not just a workflow; it actually influences which kinds of models you choose per layer. If you treat it as “just a pipeline name,” you might miss that the constraints differ sharply between nano and banana.
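To show how the tooling differs per layer, here is a sketch that pairs a plain threshold rule at the nano level with Markov-style transition estimates at the banana level. The sensor framing and the 80 degree cutoff are assumptions picked for the example.

```python
from collections import Counter, defaultdict


def nano_state(temperature_c: float) -> str:
    """Nano: a plain threshold rule (the 80 degree cutoff is assumed)."""
    return "hot" if temperature_c >= 80 else "ok"


def transition_probs(states: list[str]) -> dict:
    """Banana: first-order Markov-style transition estimates over a history."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(states, states[1:]):
        counts[prev][nxt] += 1
    return {
        prev: {nxt: n / sum(c.values()) for nxt, n in c.items()}
        for prev, c in counts.items()
    }


readings = [70, 72, 85, 90, 71, 88, 91, 93]
states = [nano_state(r) for r in readings]
probs = transition_probs(states)
print(states)
print(probs)
```

The threshold needs one reading and no memory; the transition table only means something once you have a sequence.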

3. A very concrete mental mapping for you

Try this simple template and plug in whatever domain you care about:

Define your nano event: “A single X”

- Click, message, sensor reading, frame of a video, photo, log line

Decide the nano decisions you can safely make per event:

- Is this spam?

- Is this unsafe content?

- What category does this belong to?

Define what makes a banana unit in your world:

- One user’s session

- One project

- One conversation

- One day’s worth of sensor data

Decide banana decisions that must look across many events:

- Is this user likely to churn?

- Is this machine failing soon?

- Is this conversation resolved or escalating?

Then connect them:

- Banana model only sees: “user’s list of nano tags / scores / events”

- It does not need the raw chaos; nano already tamed that.

If you can fill in those 5 blanks for your use case, you basically understand the Nano Banana Model in practical terms.
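If it helps, the five blanks can be written down as a small fill-in-the-slots structure. `NanoBananaDesign` and its field names are hypothetical, and the support-chat rules plugged into it are a toy example.

```python
from dataclasses import dataclass
from typing import Any, Callable, Hashable, Iterable


@dataclass
class NanoBananaDesign:
    nano_decide: Callable[[Any], dict]          # blank 2: per-event decisions
    banana_key: Callable[[Any], Hashable]       # blank 3: what groups events
    banana_decide: Callable[[list[dict]], Any]  # blank 4: decision per group

    def run(self, events: Iterable[Any]) -> dict:
        groups: dict = {}
        for event in events:                    # blank 1: one nano event
            tags = self.nano_decide(event)
            groups.setdefault(self.banana_key(event), []).append(tags)
        # blank 5: the banana level sees only nano outputs, never raw events
        return {key: self.banana_decide(tags) for key, tags in groups.items()}


design = NanoBananaDesign(
    nano_decide=lambda msg: {"angry": "!" in msg["text"]},
    banana_key=lambda msg: msg["conversation"],
    banana_decide=lambda tags: (
        "escalate" if sum(t["angry"] for t in tags) >= 2 else "ok"
    ),
)
messages = [
    {"conversation": "c1", "text": "Still broken!"},
    {"conversation": "c1", "text": "Fix this now!"},
    {"conversation": "c2", "text": "Thanks, all good."},
]
result = design.run(messages)
print(result)
```

Swapping domains means replacing the three callables; the `run` loop stays the same.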

4. A visual / content example that’s actually in the wild

Since you mentioned “real world” and not just theory, here’s how this structure quietly shows up in creative / AI tools.

Take the workflow of a typical AI headshot app on iPhone:

Nano level

- Each uploaded photo is processed:

- Face detection

- Quality score

- Style tags (lighting, angle, expression)

- Safety checks

- That’s all nano processing. Fast, parallel, per-image.


Banana level

- The system builds a user profile from multiple photos:

- Stable facial features

- Consistent style preferences

- Chosen output type (corporate, casual, creative)

- Then it decides: what final style, backgrounds, angles make sense for this person as a whole, not just this one image.


That is exactly a Nano Banana pipeline in action: lots of micro per-image processing first, then an aggregated decision over the whole “banana” of user photos.

If you want to see that kind of layered pipeline in practice rather than reading theory, using something like the Eltima AI Headshot Generator app for iPhone is actually pretty instructive. You give it multiple photos, it quietly runs nano-level analysis on each, then uses the aggregated banana-level profile to output consistent, studio-quality portraits. You don’t see the internals, but conceptually it’s a textbook example.

Here’s an App Store link if you want to poke at how such a system feels from the outside:

create realistic AI headshots on your iPhone

Play with it and mentally trace: “What are the nanos it must be computing? What’s the banana decision it clearly has to make to keep all results consistent?”

5. How to apply this without overthinking it

When you read a paper or blog post about the Nano Banana Model and it sounds too abstract, translate it to:

- What’s one small thing they repeatedly process? That’s nano.

- How do they bundle those into something that maps to a user, session, device, or document? That’s banana.

- Where do they actually decide or predict something meaningful? That’s the banana model using nano outputs.

If you want, drop your specific domain (e.g. “marketing analytics” or “industrial IoT” or “LLM agents”), and it’s possible to sketch a nano/banana design for that exact scenario so it doesn’t feel like theory floating in the void.